Real estate API for a search-to-rent application

Let's take a deep dive into an OpenAPI-documented API for a search-to-rent application focused on the Canadian real estate market. The tech stack is Bun, Hono, Drizzle, PlanetScale, Algolia, and CloudAMQP.

February 22, 2024

Objective

The goal of this demo is to build the backend for a search-to-rent front end using Algolia's advanced search features and the latest technologies in the JavaScript serverless world. For the time being, deploying the app and making it publicly available is out of scope, which is why you will not find authentication or authorization here. If you are interested in those features, you can find examples in Hono's documentation or in the documentation of any authentication service of your liking. You can even find here one of my older articles describing exactly that.

Update: new article published on how to add Clerk authentication and authorization

What is covered

Code repository

Online resources

- Bun is a fast JavaScript all-in-one toolkit

- Hono - Ultrafast web framework for the Edges

- Drizzle ORM - TypeScript ORM with top-notch performance, lightweight and edge ready

- PlanetScale - the world's most advanced MySQL platform

- Algolia - The one-stop shop for AI search

- CloudAMQP - Queue starts here. Managing the largest fleet of RabbitMQ and LavinMQ clusters worldwide

- Zod - TypeScript-first schema validation with static type inference

- Postman - Build APIs together. Over 30 million developers use Postman

- Pexels - The best free stock photos, royalty free images & videos shared by creators

Drizzle models and relations

Please take a look at the README.md file first to set up the environment variables. You can access the editable Draw.io entity relationship diagram here. The all table is used only for search testing, so that its results can be compared with those coming from Algolia. The Drizzle models are defined inside the /src/models/schemas folder.

The SQL schema for the models and the relations between them are declared directly in TypeScript. Let's take media and mediaType as an example, the latter being the parent. Each media record is related to one mediaType and to one property record. In turn, mediaType is related to many media records. See /src/models/schemas/media.ts.

import { createId } from '@paralleldrive/cuid2';

import { relations } from 'drizzle-orm';

import { int, mysqlTable, timestamp, varchar } from 'drizzle-orm/mysql-core';

import { property } from './property';

export const media = mysqlTable('media', {

id: varchar('id', { length: 128 })

.$defaultFn(() => createId())

.primaryKey(),

assetId: varchar('asset_id', { length: 256 }).notNull(),

mediaTypeId: varchar('media_type_id', { length: 128 }).notNull(),

propertyId: varchar('property_id', { length: 128 }).notNull(),

order: int('order').notNull(),

createdAt: timestamp('created_at').defaultNow().notNull(),

updatedAt: timestamp('updated_at').defaultNow().notNull(),

});

export const mediaMediaTypeRelations = relations(media, ({ one }) => ({

mediaType: one(mediaType, {

fields: [media.mediaTypeId],

references: [mediaType.id],

}),

}));

export const mediaPropertyRelations = relations(media, ({ one }) => ({

property: one(property, {

fields: [media.propertyId],

references: [property.id],

}),

}));

export const mediaType = mysqlTable('media_type', {

id: varchar('id', { length: 128 })

.$defaultFn(() => createId())

.primaryKey(),

name: varchar('name', { length: 256 }).notNull().unique(),

createdAt: timestamp('created_at').defaultNow().notNull(),

updatedAt: timestamp('updated_at').defaultNow().notNull(),

});

export const mediaTypeMediaRelations = relations(mediaType, ({ many }) => ({

media: many(media),

}));

Many-to-many relations are declared via an intermediary model such as featureToProperty (/src/models/schemas/feature.ts). Both related models, feature and property, have a one-to-many relation with this intermediary featureToProperty model.

import { createId } from '@paralleldrive/cuid2';

import { relations } from 'drizzle-orm';

import {

int,

mysqlTable,

primaryKey,

timestamp,

varchar,

} from 'drizzle-orm/mysql-core';

import { property } from './property';

export const feature = mysqlTable('feature', {

id: varchar('id', { length: 128 })

.$defaultFn(() => createId())

.primaryKey(),

name: varchar('name', { length: 256 }).notNull().unique(),

order: int('order').notNull(),

createdAt: timestamp('created_at').defaultNow().notNull(),

updatedAt: timestamp('updated_at').defaultNow().notNull(),

});

export const featurePropertyRelations = relations(feature, ({ many }) => ({

featureToProperty: many(featureToProperty),

}));

export const featureToProperty = mysqlTable(

'feature_to_property',

{

featureId: varchar('feature_id', { length: 128 }).notNull(),

propertyId: varchar('property_id', { length: 128 }).notNull(),

},

(t) => ({

pk: primaryKey({

name: 'feature_to_property_pk',

columns: [t.featureId, t.propertyId],

}),

}),

);

export const featureToPropertyRelations = relations(

featureToProperty,

({ one }) => ({

feature: one(feature, {

fields: [featureToProperty.featureId],

references: [feature.id],

}),

property: one(property, {

fields: [featureToProperty.propertyId],

references: [property.id],

}),

}),

);

Drizzle provides the most SQL-like way to fetch data from your database while remaining type-safe and composable. It natively supports almost every query feature and capability of every dialect, and whatever it doesn't support yet can be added by the user with the powerful sql operator.

Inside the /src/models/preparedStatements folder, for example, you can see two ways of fetching data: one using query (/src/models/preparedStatements/preparedUnit.ts) and the other using select (/src/models/preparedStatements/preparedImagesByPropertyId.ts).

import { sql } from 'drizzle-orm';

import { db } from '@/models';

const preparedUnit = db.query.unit

.findFirst({

columns: {

id: true,

propertyId: true,

floorPlanId: true,

name: true,

rent: true,

deposit: true,

available: true,

immediate: true,

availableDate: true,

shortterm: true,

longterm: true,

unitNumber: true,

unitName: true,

surface: true,

furnished: true,

heat: true,

water: true,

electricity: true,

internet: true,

television: true,

order: true,

published: true,

},

where: (unit, { eq }) => eq(unit.id, sql.placeholder('id')),

with: {

bedroom: {

columns: {

name: true,

},

},

bathroom: {

columns: {

name: true,

},

},

},

})

.prepare();

export default preparedUnit;

import { and, eq, sql } from 'drizzle-orm';

import { db } from '@/models';

import { media, mediaType } from '@/models/schema';

import { insertMediaTypeSchemaExample } from '@/models/zodSchemas';

let whereClause = and(

eq(media.propertyId, sql.placeholder('propertyId')),

eq(mediaType.name, 'image'),

);

if (process.env.BUN_ENV && process.env.BUN_ENV === 'algolia') {

whereClause = and(

eq(media.propertyId, sql.placeholder('propertyId')),

eq(mediaType.name, insertMediaTypeSchemaExample.name),

);

}

const preparedImagesByPropertyId = db

.select({ id: media.id, assetId: media.assetId })

.from(media)

.innerJoin(mediaType, eq(media.mediaTypeId, mediaType.id))

.where(whereClause)

.orderBy(media.order)

.prepare();

export default preparedImagesByPropertyId;

There are two ways of inferring a type from a model schema. One is directly from the model, with typeof city.$inferSelect or typeof city.$inferInsert. The other is through Zod, with z.infer<typeof citySelectSchema> or z.infer<typeof cityInsertSchema>, where the Zod schemas are created by the createSelectSchema and createInsertSchema functions provided by the drizzle-zod package. See /src/models/zodSchemas/city.ts. The main difference is that the Zod route lets you apply transformations to the schema and then infer the transformed type.

Database seeding using scraped Wikipedia data and Faker

Seeding the database took several steps in order to generate over 24K property units for the Algolia search index. The first step was to scrape Wikipedia for data on almost 200 communities in Calgary, Alberta, Canada: sector, quadrant, population, dwellings, immigrants, income, and the percentage of properties used for renting. Take a look at /src/utils/db/seed/wiki/scrapeCommunities.ts, /src/utils/db/seed/wiki/scrapeCommunity.ts, and /src/utils/db/seed/wiki/scrapeGeohackCoords.ts.

The second step was to generate addresses based on location coordinates. I used Faker to randomly generate coordinates inside a bounding box around the Calgary city center. Based on those, I used the Google Maps geocoder reverse API to find a real address for a particular coordinate point. See /src/utils/db/seed/gmaps/generateAddresses.ts.
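
The coordinate step can be sketched without Faker. The snippet below is a minimal illustration, not the repository's code: the bounding box values, the seeded `mulberry32` generator (used here only so runs are reproducible), and the `randomPointInBox` and `generatePoints` helpers are all assumptions introduced for this example. Each generated point would then be handed to a reverse geocoding call.

```typescript
interface BoundingBox {
  minLat: number;
  maxLat: number;
  minLng: number;
  maxLng: number;
}

interface LatLng {
  lat: number;
  lng: number;
}

// Hypothetical bounding box around the Calgary city center (illustrative values).
const calgaryBox: BoundingBox = {
  minLat: 50.9,
  maxLat: 51.2,
  minLng: -114.3,
  maxLng: -113.9,
};

// Small seeded PRNG (mulberry32); each call returns a number in [0, 1).
const mulberry32 = (seed: number): (() => number) => {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
};

// Scale two random numbers into the latitude and longitude ranges of the box.
const randomPointInBox = (box: BoundingBox, rng: () => number): LatLng => ({
  lat: box.minLat + rng() * (box.maxLat - box.minLat),
  lng: box.minLng + rng() * (box.maxLng - box.minLng),
});

// Generate `count` points; the same seed always yields the same points.
const generatePoints = (
  box: BoundingBox,
  count: number,
  seed: number,
): LatLng[] => {
  const rng = mulberry32(seed);
  return Array.from({ length: count }, () => randomPointInBox(box, rng));
};
```

A uniform draw inside a box will also land on rivers, parks, and highways, which is one reason a reverse geocoding pass is needed to snap each point to a real address.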

The third step was to generate property records using Faker and a set of distribution biases, one for each field of the property record (/src/utils/db/seed/distribution/index.ts). The realism of the faked data stops short of assigning a rent that is proportional to the features, building age, or location. Even with this limitation, the data is suitable for simulating the load.
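
A distribution bias boils down to a weighted random choice. The sketch below shows that general technique under stated assumptions; it is not a copy of /src/utils/db/seed/distribution/index.ts, and the `pickWeighted` helper and the bedroom weights are hypothetical.

```typescript
interface WeightedOption<T> {
  value: T;
  weight: number; // relative weight, expected to be greater than 0
}

// Pick one option with probability proportional to its weight.
// `roll` is a number in [0, 1), for example Math.random().
function pickWeighted<T>(options: WeightedOption<T>[], roll: number): T {
  if (options.length === 0) {
    throw new Error('options must not be empty');
  }
  const total = options.reduce((sum, option) => sum + option.weight, 0);
  let threshold = roll * total;
  for (const option of options) {
    threshold -= option.weight;
    if (threshold < 0) {
      return option.value;
    }
  }
  // Fallback for floating-point drift when roll is very close to 1.
  return options[options.length - 1].value;
}

// Hypothetical bias: most generated units have one or two bedrooms.
const bedroomBias: WeightedOption<string>[] = [
  { value: 'studio', weight: 10 },
  { value: '1 bedroom', weight: 40 },
  { value: '2 bedrooms', weight: 35 },
  { value: '3 bedrooms', weight: 15 },
];
```

Passing the random number in as an argument, instead of calling `Math.random()` inside the function, keeps the helper deterministic and easy to test.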

Drizzle Studio, Drizzle Kit, and migrations

To be able to connect to your PlanetScale database with Drizzle Kit and Drizzle Studio, take a look at /drizzle.config.ts:

import type { Config } from 'drizzle-kit';

export default {

schema: './src/models/schema.ts',

out: './drizzle',

driver: 'mysql2',

dbCredentials: {

uri: process.env.DATABASE_URI as string,

},

verbose: true,

strict: true,

} satisfies Config;

Drizzle Studio is a new way to explore the SQL database in Drizzle projects. It reads your Drizzle config file, connects to your database, and lets you browse, add, delete, and update everything based on your existing Drizzle SQL schema.

You have a few scripts at your disposal to work with Drizzle Kit. You can use db:push at the beginning of the project and db:generate:migration plus db:migrate later. With Drizzle migrations you only need to edit your schema definitions; db:generate:migration will pick up the changes and generate migration files inside the /drizzle folder. If you decide to reverse a migration, you only have to undo the schema change and then generate another migration.

"db:push": "drizzle-kit push:mysql --config=drizzle.config.ts",

"db:generate:migration": "drizzle-kit generate:mysql --config=drizzle.config.ts",

"db:migrate": "bun ./src/utils/db/migrate.ts"

Inside of /src/utils/db/migrate.ts you have to let the Drizzle migrator for PlanetScale know where the db connection and the migrations folder are.

API route and route handler CRUD factories

Plain Hono routes and route handlers are defined as in the snippet that follows. To generate the documentation and the specification for the API on the fly, however, we need to use the Zod OpenAPI Hono package.

app.get('/', (c) => c.text('GET /'))

app.post('/', (c) => c.text('POST /'))

app.put('/', (c) => c.text('PUT /'))

app.delete('/', (c) => c.text('DELETE /'))

So, the routes and route handlers have to be created like this:

import { createRoute, OpenAPIHono } from '@hono/zod-openapi'

const app = new OpenAPIHono();

const route = createRoute({

method: 'get',

path: '/users/{id}',

request: {

params: ParamsSchema,

},

responses: {

200: {

content: {

'application/json': {

schema: UserSchema,

},

},

description: 'Retrieve the user',

},

},

});

app.openapi(route, (c) => {

const { id } = c.req.valid('param')

return c.json({

id,

age: 20,

name: 'Ultra-man',

})

});

Inside the /src/routes folder, you can see how each route group is generated using route and route handler factories and later collected and assembled into the general router of the app. The city route group (/src/routes/city/index.ts) looks like this:

import { OpenAPIHono } from '@hono/zod-openapi';

import { postmanIds } from '@/constants';

import {

customInsertCityCheck,

deleteItemHandler,

postCreateItemHandler,

postListItemHandler,

putUpdateItemHandler,

} from '@/controllers';

import { zodDefaultHook } from '@/middlewares';

import { city, community, property } from '@/models/schema';

import {

InsertCitySchema,

insertCitySchema,

insertCitySchemaExample,

selectCitySchema,

updatableCityFields,

UpdateCitySchema,

updateCitySchema,

updateCitySchemaExample,

} from '@/models/zodSchemas';

import { NomenclatureTag } from '@/types';

import { createBodyDescription, getModelFields } from '@/utils';

import {

bodyCityListSchema,

cityBodySchemaExample,

getCityCursorValidatorByOrderBy,

paginationCityOrderSchema,

} from '@/validators';

import {

deleteItem,

postCreateItem,

postListItem,

putUpdateItem,

} from '../common';

const app = new OpenAPIHono({

defaultHook: zodDefaultHook,

});

const { fields } = getModelFields(city);

app.openapi(

postListItem({

tag: NomenclatureTag.City,

selectItemSchema: selectCitySchema,

paginationItemOrderSchema: paginationCityOrderSchema,

bodyItemListSchema: bodyCityListSchema,

bodyItemListSchemaExample: cityBodySchemaExample,

bodyDescription: createBodyDescription(fields),

}),

postListItemHandler({

model: city,

getItemCursorValidatorByOrderBy: getCityCursorValidatorByOrderBy,

paginationItemOrderSchema: paginationCityOrderSchema,

}),

);

app.openapi(

postCreateItem({

tag: NomenclatureTag.City,

insertItemSchema: insertCitySchema,

insertItemSchemaExample: insertCitySchemaExample,

}),

postCreateItemHandler<InsertCitySchema>({

model: city,

customCheck: customInsertCityCheck,

postmanId: postmanIds.city,

}),

);

app.openapi(

putUpdateItem({

tag: NomenclatureTag.City,

updateItemSchema: updateCitySchema,

updateItemSchemaExample: updateCitySchemaExample,

postmanId: postmanIds.city,

}),

putUpdateItemHandler<UpdateCitySchema>({

model: city,

tag: NomenclatureTag.City,

updatableFields: updatableCityFields,

customCheck: customInsertCityCheck,

}),

);

app.openapi(

deleteItem({

tag: NomenclatureTag.City,

postmanId: postmanIds.city,

}),

deleteItemHandler({

model: city,

tag: NomenclatureTag.City,

idField: 'id',

children: [

{

model: community,

tag: NomenclatureTag.Community,

parentIdField: 'cityId',

},

{

model: property,

tag: NomenclatureTag.Property,

parentIdField: 'cityId',

},

],

}),

);

export default app;

Take a look at the route factories inside the /src/routes/common folder and at the route handler factories inside the /src/controllers/common folder.

The putUpdateItem route factory (/src/routes/common/putUpdateItem.ts) looks like this:

import { createRoute, z } from '@hono/zod-openapi';

import {

CommonUpdateSchema,

CommonUpdateSchemaExample,

NomenclatureTag,

} from '@/types';

import { errorSchema } from '@/validators';

export const successSchema = z.object({

success: z.literal(true),

data: z.object({ id: z.string() }),

});

const putUpdateItem = ({

tag,

updateItemSchema,

updateItemSchemaExample,

postmanId,

}: {

tag: NomenclatureTag;

updateItemSchema: CommonUpdateSchema;

updateItemSchemaExample: CommonUpdateSchemaExample;

postmanId: string;

}) =>

createRoute({

method: 'put',

path: '/update/{id}',

tags: [tag],

request: {

params: z.object({

id: z.string().openapi({ example: postmanId }),

}),

body: {

description: `<p>Update a ${tag} object.</p>`,

content: {

'application/json': {

schema: updateItemSchema,

example: updateItemSchemaExample,

},

},

required: true,

},

},

responses: {

200: {

description: `Responds with the id of the updated ${tag}.`,

content: {

'application/json': {

schema: successSchema,

},

},

},

400: {

description: 'Responds with a bad request error message.',

content: {

'application/json': {

schema: errorSchema,

},

},

},

},

});

export default putUpdateItem;

The putUpdateItemHandler route handler factory (/src/controllers/common/putUpdateItemHandler.ts) looks like this:

import { z } from '@hono/zod-openapi';

import { eq } from 'drizzle-orm';

import { Context } from 'hono';

import intersection from 'lodash.intersection';

import pick from 'lodash.pick';

import without from 'lodash.without';

import { db } from '@/models';

import {

CommonModel,

CommonSelectItemSchemaType,

CommonUpdateItemSchema,

NomenclatureTag,

} from '@/types';

import { badRequestResponse, getModelFields } from '@/utils';

const putUpdateItemHandler =

<UpdateItemSchema>({

model,

tag,

updatableFields,

customCheck,

onSuccess,

}: {

model: CommonModel;

tag: NomenclatureTag;

updatableFields: string[];

customCheck?: (

body: UpdateItemSchema,

id?: string,

tag?: NomenclatureTag,

) => Promise<string | null>;

onSuccess?: (

id: string,

newValues: CommonUpdateItemSchema & { updatedAt: Date },

oldValues: CommonSelectItemSchemaType,

) => Promise<void>;

}) =>

async (c: Context) => {

const id = c.req.param('id');

const body: UpdateItemSchema = await c.req.json();

const { required, optional } = getModelFields(model);

const mandatory = without(required, 'id');

const fields = [...mandatory, ...optional];

if (

!body ||

Object.keys(body).length === 0 ||

Object.keys(body).length > updatableFields.length ||

intersection(Object.keys(body), updatableFields).length === 0

) {

return c.json(

badRequestResponse({

reason: 'validation error',

message: `body must contain valid ${updatableFields.join(' or ')} or all`,

path: updatableFields,

}),

400,

);

}

if (customCheck) {

const customCheckError = await customCheck(body, id, tag);

if (customCheckError) {

return c.json(JSON.parse(customCheckError), 400);

}

}

const existingItem = await db.select().from(model).where(eq(model.id, id));

if (!existingItem.length) {

return c.json(

badRequestResponse({

reason: 'validation error',

message: `${tag} with id ${id} does not exist`,

path: ['id'],

}),

400,

);

}

const newValues = {

...pick(pick(body, fields), updatableFields),

updatedAt: new Date(),

};

await db.update(model).set(newValues).where(eq(model.id, id));

if (onSuccess) {

await onSuccess(

id,

newValues,

existingItem[0] as unknown as CommonSelectItemSchemaType,

);

}

return c.json({ success: z.literal(true).value, data: { id } }, 200);

};

export default putUpdateItemHandler;
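
The body checks at the top of the handler can be read in isolation. The following is a dependency-free restatement of that condition, written for illustration only: `isAcceptableUpdateBody` and `pickUpdatable` are hypothetical names, a `Set` lookup stands in for `lodash.intersection`, and `pickUpdatable` covers only the outer of the two `pick` calls.

```typescript
// Mirrors the four rejection conditions used by the handler: missing body,
// empty body, more keys than updatable fields, or no key in common with
// the updatable fields.
const isAcceptableUpdateBody = (
  body: Record<string, unknown> | null | undefined,
  updatableFields: string[],
): boolean => {
  if (!body) return false;
  const keys = Object.keys(body);
  if (keys.length === 0) return false;
  if (keys.length > updatableFields.length) return false;
  const allowed = new Set(updatableFields);
  return keys.some((key) => allowed.has(key));
};

// Keeps only the keys that are updatable and silently drops everything else.
const pickUpdatable = (
  body: Record<string, unknown>,
  updatableFields: string[],
): Record<string, unknown> => {
  const allowed = new Set(updatableFields);
  const picked: Record<string, unknown> = {};
  for (const key of Object.keys(body)) {
    if (allowed.has(key)) picked[key] = body[key];
  }
  return picked;
};
```

Note one consequence of this condition: a body that mixes one updatable key with an unknown key passes the check as long as it does not have more keys than there are updatable fields. The unknown key is then dropped before the update runs.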

Zod validators and custom code generator using EJS

Zod is key all over the application, whether to validate route query parameters, IDs, and bodies, or to infer types. As seen above, the route handler factories also make use of the Zod schemas. Inside the /src/models/zodSchemas folder you can find the select, insert, and update schemas, their types, and valid examples for the insert and update bodies. Those examples are used by the API documentation and by the tests generated for Postman.

The main location for the validation schemas is the /src/validators folder. In there, you can find files with "List" in their names. Those files were generated for each model to validate the body, including its logical filtering operations, of the listing [POST] endpoints. The other files contain ad-hoc schema definitions and examples for the logical filtering operations.

The /src/validators/codegen folder contains the CLI command that generates the code for the Zod "List" schemas and the EJS template used for code generation (/src/validators/codegen/list/template.ejs). These schemas are useful not only for validating each model but also for responding with specific, easy-to-understand error messages.

Query object for data filtering, cursor pagination, and order by

Every [POST] endpoint for data listing is packed with features for each model: a flexible filtering schema with logical operations, sorting ascending and descending by every field, and cursor-based pagination. On top of that, the [POST] /search endpoint allows you to specify the fields for the response objects.

{

"and": [

["eq", "smoking", 1],

["between", "rent", 1000, 1200],

["like", "address", "Crescent"],

["or",

[

["eq", "cats", 1],

["eq", "dogs", 1]

]

],

["aroundLatLng", 50.9573828, -114.084153, 1000]

],

"fields": [

"listingId",

"propertyId",

"rent",

"immediate",

"imageId",

"smoking",

"cats",

"dogs"

]

}
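
Cursor-based pagination, mentioned above, can be illustrated in memory. This is a sketch of the general technique under stated assumptions, not the logic of postListItemHandler: the `Listing` shape, the `Cursor` type, and the `paginate` function are hypothetical.

```typescript
interface Listing {
  id: string;
  rent: number;
}

// A cursor records the sort position of the last row of the previous page.
interface Cursor {
  rent: number;
  id: string;
}

interface Page {
  items: Listing[];
  nextCursor: Cursor | null;
}

// Order by rent ascending, with id as a tie-breaker so the order is total.
const comparePosition = (a: Cursor, b: Cursor): number => {
  if (a.rent !== b.rent) return a.rent - b.rent;
  if (a.id === b.id) return 0;
  return a.id < b.id ? -1 : 1;
};

const paginate = (
  rows: Listing[],
  limit: number,
  cursor: Cursor | null,
): Page => {
  const sorted = [...rows].sort(comparePosition);
  // Keep only the rows that come strictly after the cursor position.
  const remaining =
    cursor === null
      ? sorted
      : sorted.filter((row) => comparePosition(row, cursor) > 0);
  const items = remaining.slice(0, limit);
  const last = items[items.length - 1];
  // Hand out a cursor only when more rows remain after this page.
  const nextCursor =
    remaining.length > limit && last
      ? { rent: last.rent, id: last.id }
      : null;
  return { items, nextCursor };
};
```

The tie-breaker matters: without it, two listings with the same rent could be skipped or repeated across pages. Unlike offset pagination, the position is defined by the data itself, so rows inserted before the cursor do not shift later pages.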

The route handler for these endpoints is /src/controllers/common/postListItemHandler.ts. Parsing the filtering object and conversion to Drizzle query builder is done by /src/controllers/common/convertBodyToQBuilder.ts:

import { between, eq, gt, lt, or, SQL, sql } from 'drizzle-orm';

import { CommonItemListSchema, CommonModel, ModelField } from '@/types';

import { dateIsoToDatetime } from '@/utils';

const mapOperations = (

// eslint-disable-next-line @typescript-eslint/no-explicit-any

model: any,

ops: CommonItemListSchema,

fields: ModelField,

) => {

const args: (SQL<unknown> | undefined)[] = [];

ops.forEach((item) => {

if (

item[0] === 'eq' &&

(fields.id.includes(item[1]) ||

fields.string.includes(item[1]) ||

fields.numeric.includes(item[1]) ||

fields.tinyInt.includes(item[1]) ||

fields.decimal.includes(item[1]))

) {

args.push(eq(model[item[1]], item[2]));

}

if (item[0] === 'lt' && fields.numeric.includes(item[1])) {

args.push(lt(model[item[1]], parseInt(`${item[2]}`)));

}

if (item[0] === 'lt' && fields.decimal.includes(item[1])) {

args.push(lt(model[item[1]], parseFloat(`${item[2]}`)));

}

if (item[0] === 'gt' && fields.numeric.includes(item[1])) {

args.push(gt(model[item[1]], parseInt(`${item[2]}`)));

}

if (item[0] === 'gt' && fields.decimal.includes(item[1])) {

args.push(gt(model[item[1]], parseFloat(`${item[2]}`)));

}

if (item[0] === 'between' && fields.numeric.includes(item[1])) {

args.push(

between(model[item[1]], parseInt(`${item[2]}`), parseInt(`${item[3]}`)),

);

}

if (item[0] === 'between' && fields.decimal.includes(item[1])) {

args.push(

between(

model[item[1]],

parseFloat(`${item[2]}`),

parseFloat(`${item[3]}`),

),

);

}

if (item[0] === 'lt' && fields.datetime.includes(item[1])) {

args.push(sql`${model[item[1]]} < ${dateIsoToDatetime(`${item[2]}`)}`);

}

if (item[0] === 'gt' && fields.datetime.includes(item[1])) {

args.push(sql`${model[item[1]]} > ${dateIsoToDatetime(`${item[2]}`)}`);

}

if (item[0] === 'between' && fields.datetime.includes(item[1])) {

args.push(

sql`${model[item[1]]} BETWEEN ${dateIsoToDatetime(`${item[2]}`)} AND ${dateIsoToDatetime(`${item[3]}`)}`,

);

}

if (item[0] === 'lt' && fields.dateOnly.includes(item[1])) {

args.push(sql`${model[item[1]]} < ${item[2]}`);

}

if (item[0] === 'gt' && fields.dateOnly.includes(item[1])) {

args.push(sql`${model[item[1]]} > ${item[2]}`);

}

if (item[0] === 'between' && fields.dateOnly.includes(item[1])) {

args.push(sql`${model[item[1]]} BETWEEN ${item[2]} AND ${item[3]}`);

}

});

return args;

};

const convertBodyToQBuilder = (

model: CommonModel,

and: CommonItemListSchema,

fields: ModelField,

): SQL<unknown>[] | null => {

const args: (SQL<unknown> | undefined)[] = [];

const orOps = and.filter((item) => item[0] === 'or');

if (orOps.length) {

orOps.forEach((item) => {

const orArgs = mapOperations(

model,

item[1] as CommonItemListSchema,

fields,

);

args.push(or(...orArgs));

});

}

const restArgs = mapOperations(model, and, fields);

args.push(...restArgs);

const filteredArgs = args.filter((item) => typeof item !== 'undefined');

if (filteredArgs.length === 0) {

return null;

}

return filteredArgs as SQL<unknown>[];

};

export default convertBodyToQBuilder;
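
To make the semantics of the filter object concrete, the sketch below evaluates the same tuple format against plain objects instead of building SQL. It is an illustration only: `matchesAll`, `matchesComparison`, and the type names are hypothetical, and only eq, lt, gt, between, and a single level of or are covered.

```typescript
type Comparison =
  | ['eq', string, string | number]
  | ['lt', string, number]
  | ['gt', string, number]
  | ['between', string, number, number];

type Operation = Comparison | ['or', Comparison[]];

type Row = Record<string, string | number>;

// Evaluate one comparison tuple against a row.
const matchesComparison = (row: Row, op: Comparison): boolean => {
  const value = row[op[1]];
  if (op[0] === 'eq') return value === op[2];
  // The remaining operations are only defined for numbers in this sketch.
  if (typeof value !== 'number') return false;
  if (op[0] === 'lt') return value < op[2];
  if (op[0] === 'gt') return value > op[2];
  // 'between' is inclusive on both ends, like SQL BETWEEN.
  return value >= op[2] && value <= op[3];
};

// Every top-level operation must hold; an 'or' group holds when at least
// one of its inner comparisons holds.
const matchesAll = (row: Row, operations: Operation[]): boolean =>
  operations.every((op) =>
    op[0] === 'or'
      ? op[1].some((inner) => matchesComparison(row, inner))
      : matchesComparison(row, op),
  );

// Same structure as the request body shown earlier, minus like and aroundLatLng.
const exampleFilter: Operation[] = [
  ['eq', 'smoking', 1],
  ['between', 'rent', 1000, 1200],
  ['or', [['eq', 'cats', 1], ['eq', 'dogs', 1]]],
];
```

Read this way, the example filter selects rows where smoking is allowed, the rent is between 1000 and 1200 inclusive, and cats or dogs are allowed.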

Middleware and services

The middleware is located inside the /src/middlewares folder and handles validation, logging, and error interception, transforming errors into a uniform format. Let's take a look at /src/middlewares/httpExceptionHandler.ts, which is in charge of parsing Hono exceptions:

import { Context } from 'hono';

import {

badRequestResponse,

getCodeDescriptionPath,

hasStatusNameBody,

} from '@/utils';

const httpExceptionHandler = async (

// eslint-disable-next-line @typescript-eslint/no-explicit-any

err: any,

c: Context,

) => {

if (typeof err.getResponse !== 'undefined') {

const response = err.getResponse();

if (response instanceof Response) {

// Read from a clone so the original response body stays unread for the return below.
const body = await response.clone().text();

if (body && body === 'Malformed JSON in request body') {

return c.json(

badRequestResponse({

reason: 'validation error',

message: 'malformed json in request body',

path: ['body'],

}),

400,

);

}

return response;

}

}

if (

hasStatusNameBody(err) &&

err.status === 400 &&

err.name === 'DatabaseError' &&

err.body &&

err.body.message

) {

const result = getCodeDescriptionPath(err.body.message);

if (result) {

return c.json(

badRequestResponse({

reason: result.code,

message: result.description,

path: [result.path],

}),

400,

);

}

}

return c.json(

badRequestResponse({

reason: 'internal server error',

message: err.message || `${err}`,

path: ['unknown'],

}),

500,

);

};

export default httpExceptionHandler;
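
The `badRequestResponse` helper is used throughout the handlers but is not shown in this article. A minimal version might look like the sketch below. Its shape is an assumption, modeled on the `{ success, error: { reason, issues } }` payload returned by the hook in /src/middlewares/zodDefaultHook.ts; the real helper in /src/utils may differ.

```typescript
interface ErrorIssue {
  message: string;
  path: (string | number)[];
}

interface ErrorBody {
  success: false;
  error: {
    reason: string;
    issues: ErrorIssue[];
  };
}

// Hypothetical helper: wraps a single problem in the uniform error envelope.
const badRequestResponse = ({
  reason,
  message,
  path,
}: {
  reason: string;
  message: string;
  path: (string | number)[];
}): ErrorBody => ({
  success: false,
  error: {
    reason,
    issues: [{ message, path }],
  },
});
```

Keeping one envelope for validation errors, database errors, and unexpected exceptions means clients only need a single error parser.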

/src/middlewares/zodDefaultHook.ts is not a Hono middleware but a Zod OpenAPI hook that performs a similar function:

/* eslint-disable @typescript-eslint/no-explicit-any */

import { Hook, z } from '@hono/zod-openapi';

import { Env } from 'hono';

const zodDefaultHook: Hook<any, Env, any, any> = (result, c) => {

if (!result.success && result.error && result.error.issues) {

const unionErrors = result.error.issues.filter((issue) =>

issue.code.includes('invalid_union'),

);

if (unionErrors.length) {

const errors = unionErrors[0] as z.ZodInvalidUnionIssue;

const issues = errors.unionErrors.map((error) =>

error.issues.map((issue) => ({

message: issue.message.toLowerCase(),

path: issue.path,

})),

);

return c.json(

{

success: z.literal(false).value,

error: {

reason: 'validation error',

issues,

},

},

400,

);

}

return c.json(

{

success: z.literal(false).value,

error: {

reason: 'validation error',

issues: result.error.issues.map((issue) => ({

message: issue.message.toLowerCase(),

path: issue.path,

})),

},

},

400,

);

}

};

export default zodDefaultHook;

Testing endpoints using Postman CLI and Bun

The nice thing about an OpenAPI specification is not only that it is very useful for developers, but also that it can be parsed by client code generators and test runners. I used the excellent Portman CLI package to parse the API specification JSON file and generate an importable Postman collection JSON. While doing that, it also injected contract tests. From there you can access all endpoints for manual testing and even generate the Postman CLI command you need for automated tests. Take a look at the /spec folder and import the real-estate-api-demo.postman_collection.json file into your Postman application.

For test automation using Postman, I built a custom tester: a parent process that spawns child processes for the server and for the Postman CLI (/src/routes/tests/startAppAndRunPostmanTests.ts):

/* eslint-disable no-console */

import { sleep } from 'bun';

import logSymbols from 'log-symbols';

let honoPid: number | null = null;

let isSuccessful = true;

const procServer = Bun.spawn(['bun', 'run', 'start:postman:test']);

const procPostman = Bun.spawn(['bun', 'run', 'postman:test:suite']);

const killChildren = async () => {

if (honoPid) {

process.kill(honoPid);

}

procPostman.kill();

await procPostman.exited;

procServer.kill();

await procServer.exited;

console.log(logSymbols.success, 'All children processes have been killed');

};

process.on('SIGINT', async () => {

console.log(

`\n${logSymbols.info} Ctrl-C was pressed. Killing children processes...`,

);

await killChildren();

process.exit(0);

});

const streamServer = new WritableStream({

write: async (chunk) => {

const str = new TextDecoder().decode(chunk);

if (str.indexOf('hono pid: ') !== -1) {

honoPid = parseInt(str.split('hono pid: ')[1], 10);

}

},

});

const streamPostman = new WritableStream({

write: async (chunk) => {

const str = new TextDecoder().decode(chunk);

console.log(str);

if (str.indexOf('failure') !== -1) {

isSuccessful = false;

}

if (

str.indexOf('You can view the run data in Postman at: https://') !== -1

) {

await sleep(1000);

await killChildren();

if (isSuccessful) {

console.log(logSymbols.success, 'Postman tests have passed');

process.exit(0);

} else {

console.log(logSymbols.error, 'Postman tests have failed');

process.exit(1);

}

}

},

});

procServer.stdout.pipeTo(streamServer);

procPostman.stdout.pipeTo(streamPostman);
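
The stream handlers above inspect each chunk independently. As a small illustration, the PID extraction can be expressed as a pure function. `extractHonoPid` is a hypothetical name, and the sketch assumes that the whole `hono pid: <number>` marker arrives within a single chunk, which a stdout stream does not guarantee.

```typescript
const PID_MARKER = 'hono pid: ';

// Returns the PID that follows the marker, or null when the chunk has no
// marker or the text after the marker does not start with digits.
const extractHonoPid = (chunk: string): number | null => {
  const index = chunk.indexOf(PID_MARKER);
  if (index === -1) return null;
  const pid = parseInt(chunk.slice(index + PID_MARKER.length), 10);
  return Number.isNaN(pid) ? null : pid;
};
```

Returning `null` for an unparsable value avoids passing `NaN` to `process.kill`. If chunk boundaries ever become a problem, one option is to accumulate the decoded text in a buffer and search the buffer instead of the individual chunk.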

Bun tests are located inside the same folder. Here is /src/routes/tests/homeRoutes.test.ts as an example:

import { expect, test } from 'bun:test';

import { SuccessSchema } from '@/routes/home/getHome';

import app from '@/server';

test('GET /', async () => {

const response = await app.request('/');

const body = (await response.json()) as SuccessSchema;

expect(response.status).toBe(200);

expect(body).toHaveProperty('message');

expect(body.message).toBe('real estate api demo');

});

Custom CLI stdout tester for asynchronous Algolia workers

In short, the workers in charge of updating the Algolia search index work like this: a RabbitMQ consumer process (/src/providers/rabbitmq/rabbitMqConsumer.ts) continuously watches a queue for messages published by actions that require updates to Algolia. The consumer passes each message to a dispatcher (/src/services/taskWorkers/index.ts), which in turn assigns the task to a specialized worker. When a worker finishes successfully, it sends an acknowledgement back to RabbitMQ to clear that task from the queue. Otherwise, the task is never cleared from the queue.

import { Channel, Message } from 'amqplib';

import { taskPrefix, tasks } from '@/constants';

import { logger } from '@/services';

import { RabbitMqMessage } from '@/types';

import { featureWorker } from './feature';

import { mediaWorker } from './media';

import { parkingWorker } from './parking';

import {

propertyCreateWorker,

propertyDeleteWorker,

propertyUpdateWorker,

} from './property';

import { unitCreateWorker, unitDeleteWorker, unitUpdateWorker } from './unit';

const worker = (

rabbitMqMessage: RabbitMqMessage,

channel: Channel,

message: Message,

) => {

if (!rabbitMqMessage) {

logger.error(`${taskPrefix} undefined message cannot process task`);

return;

}

if (!rabbitMqMessage.type || !rabbitMqMessage.payload) {

logger.error(`${taskPrefix} invalid message cannot process task`);

return;

}

switch (rabbitMqMessage.type) {

case tasks.property.create:

propertyCreateWorker(

rabbitMqMessage,

channel,

message,

tasks.property.create,

);

break;

case tasks.property.update:

propertyUpdateWorker(

rabbitMqMessage,

channel,

message,

tasks.property.update,

);

break;

case tasks.property.delete:

propertyDeleteWorker(

rabbitMqMessage,

channel,

message,

tasks.property.delete,

);

break;

case tasks.media.create:

mediaWorker(rabbitMqMessage, channel, message, tasks.media.create);

break;

case tasks.media.update:

mediaWorker(rabbitMqMessage, channel, message, tasks.media.update);

break;

case tasks.media.delete:

mediaWorker(rabbitMqMessage, channel, message, tasks.media.delete);

break;

case tasks.parking.create:

parkingWorker(rabbitMqMessage, channel, message, tasks.parking.create);

break;

case tasks.parking.update:

parkingWorker(rabbitMqMessage, channel, message, tasks.parking.update);

break;

case tasks.parking.delete:

parkingWorker(rabbitMqMessage, channel, message, tasks.parking.delete);

break;

case tasks.feature.update:

featureWorker(rabbitMqMessage, channel, message, tasks.feature.update);

break;

case tasks.unit.create:

unitCreateWorker(rabbitMqMessage, channel, message, tasks.unit.create);

break;

case tasks.unit.update:

unitUpdateWorker(rabbitMqMessage, channel, message, tasks.unit.update);

break;

case tasks.unit.delete:

unitDeleteWorker(rabbitMqMessage, channel, message, tasks.unit.delete);

break;

default:

logger.error(`${taskPrefix} unknown task type ${rabbitMqMessage.type}`);

break;

}

};

export default worker;
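
The same dispatch-then-acknowledge flow can be sketched with a lookup table keyed by task type. This is a simplified, synchronous illustration rather than the repository's implementation: the `TaskWorker` signature, the `ack` callback that stands in for `channel.ack(message)`, and the `createDispatcher` factory are assumptions.

```typescript
interface TaskMessage {
  type: string;
  payload: unknown;
}

// In this sketch a worker returns true when the index update succeeded.
type TaskWorker = (message: TaskMessage) => boolean;

const createDispatcher =
  (
    workers: Map<string, TaskWorker>,
    ack: (message: TaskMessage) => void,
    logError: (text: string) => void,
  ) =>
  (message: TaskMessage | undefined): void => {
    if (!message || !message.type || !message.payload) {
      logError('invalid message cannot process task');
      return;
    }
    const worker = workers.get(message.type);
    if (!worker) {
      logError(`unknown task type ${message.type}`);
      return;
    }
    // Acknowledge only on success, so a failed task stays in the queue.
    if (worker(message)) {
      ack(message);
    }
  };
```

A `Map` is used instead of a plain object so that a message type such as `constructor` cannot accidentally resolve to an inherited property. In the real workers the acknowledgement happens inside each asynchronous worker; the boolean return value here only keeps the example short.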

So how can we test all these processes that work behind the scenes? How can we automate testing for something that synchronizes changes with a third-party provider? I figured that I could parse the stdout of the CLI for success messages in the logs related to Algolia. It looks like this (/src/services/taskWorkers/tests/startAppAndReadStdout.ts):

/* eslint-disable no-console */

import { sleep } from 'bun';

import { describe, expect, test } from 'bun:test';

import logSymbols from 'log-symbols';

import executeApiCalls from './executeApiCalls';

let honoPid: number | null = null;

const logs: string[] = [];

const proc = Bun.spawn(['bun', 'run', 'start:algolia:test']);

const killChildren = async () => {

if (honoPid) {

process.kill(honoPid);

}

proc.kill();

await proc.exited;

console.log(logSymbols.success, 'All children processes have been killed');

};

process.on('SIGINT', async () => {

console.log(

`\n${logSymbols.info} Ctrl-C was pressed. Killing children processes...`,

);

await killChildren();

process.exit(0);

});

const stream = new WritableStream({

write: async (chunk) => {

const str = new TextDecoder().decode(chunk);

if (str.indexOf('hono pid: ') !== -1) {

honoPid = parseInt(str.split('hono pid: ')[1], 10);

} else {

logs.push(str);

}

},

});

proc.stdout.pipeTo(stream);

describe('testing algolia workers', async () => {

await sleep(2000);

await executeApiCalls();

test('success task property.create', () => {

const tasksPropertyCreate = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task property.create created 1 index objects',

) !== -1,

);

expect(tasksPropertyCreate.length).toBe(1);

});

test('success task property.delete', () => {

const tasksPropertyDelete = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task property.delete deleted 1 index objects',

) !== -1,

);

expect(tasksPropertyDelete.length).toBe(1);

});

test('success task media.update', () => {

const tasksMediaUpdate = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task media.update updated 1 index objects',

) !== -1,

);

expect(tasksMediaUpdate.length).toBe(1);

});

test('success task feature.update', () => {

const tasksFeatureUpdate = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task feature.update updated 1 index objects',

) !== -1,

);

expect(tasksFeatureUpdate.length).toBe(1);

});

test('success task unit.create', () => {

const tasksUnitCreate = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task unit.create created 1 index objects',

) !== -1,

);

expect(tasksUnitCreate.length).toBe(2);

});

test('success task unit.delete', () => {

const tasksUnitDelete = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task unit.delete deleted 1 index objects',

) !== -1,

);

expect(tasksUnitDelete.length).toBe(2);

});

test('success task parking.update', () => {

const tasksParkingUpdate = logs.filter(

(log) =>

log.indexOf(

'[TASK] success finished task parking.update updated 1 index objects',

) !== -1,

);

expect(tasksParkingUpdate.length).toBe(2);

});

await sleep(2000);

await killChildren();

});
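
Every test above repeats the same filter. A small helper could remove that repetition. `countTaskLogs` is a hypothetical name, and the message format is copied from the assertions above. Unlike the per-chunk filter, this sketch counts occurrences in the concatenated output, so two messages delivered in one chunk are both counted, and a message split across two chunks is still found.

```typescript
type TaskVerb = 'created' | 'updated' | 'deleted';

// Count how many times the success message for a task appears in the logs.
const countTaskLogs = (
  logs: string[],
  task: string,
  verb: TaskVerb,
  objects: number = 1,
): number => {
  const expected = `[TASK] success finished task ${task} ${verb} ${objects} index objects`;
  // Splitting on the message yields one more part than there are matches.
  return logs.join('').split(expected).length - 1;
};
```

With such a helper, an assertion would read along the lines of `expect(countTaskLogs(logs, 'unit.create', 'created')).toBe(2)`.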

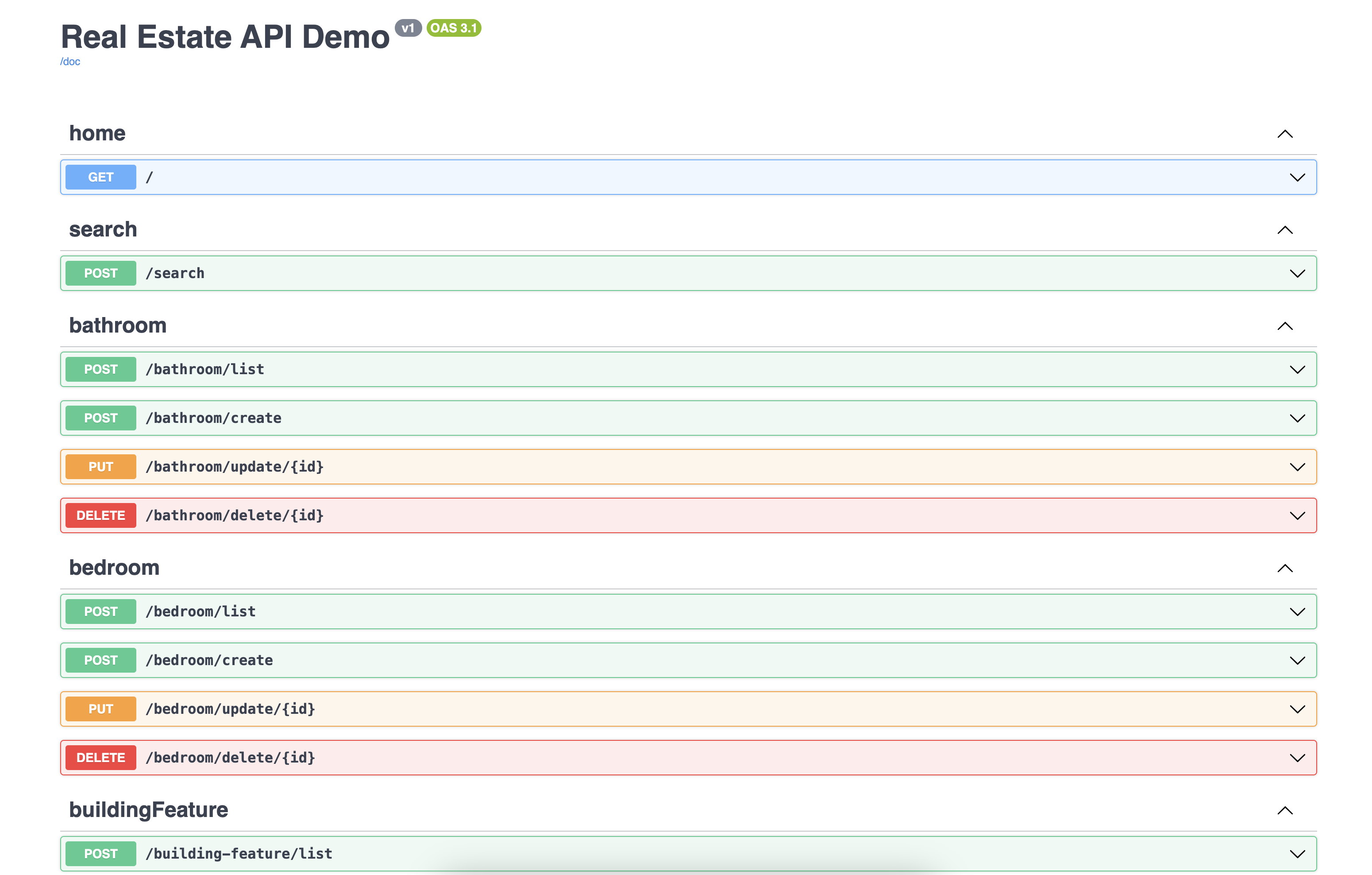

OpenAPI documentation and specification

Out of the box, you have two visual ways of issuing requests to the API: Postman and Swagger OpenAPI documentation. You can access the latter at http://localhost:3000/ui.

All the data related to endpoints, descriptions, request and response schemas, and valid examples is part of the OAS 3 specification JSON file, accessible at http://localhost:3000/doc. That JSON spec file can also be found here: /spec.